The big data challenge

Big data continues to grow exponentially, and data management technologies are changing rapidly to meet the demand. Data storage options continually evolve, from relational versus graph databases to self-hosted versus cloud deployments, not to mention the many data formats and query languages that come with these data stores.

Modern enterprises struggle to manage their data stores efficiently and cost-effectively, and in many cases must continue to maintain legacy systems that use older data storage technology. The problem is compounded by the need for businesses to understand and utilize the data contained in these various systems to their competitive advantage.

Uncovering the important data in these silos requires purpose-built techniques and tools that consolidate it and enable efficient, better-informed decision-making.

Data federation and data integration: A powerful solution to the challenge

Tom Sawyer Software has spent more than three decades working with data and providing solutions that address the data silo issue.

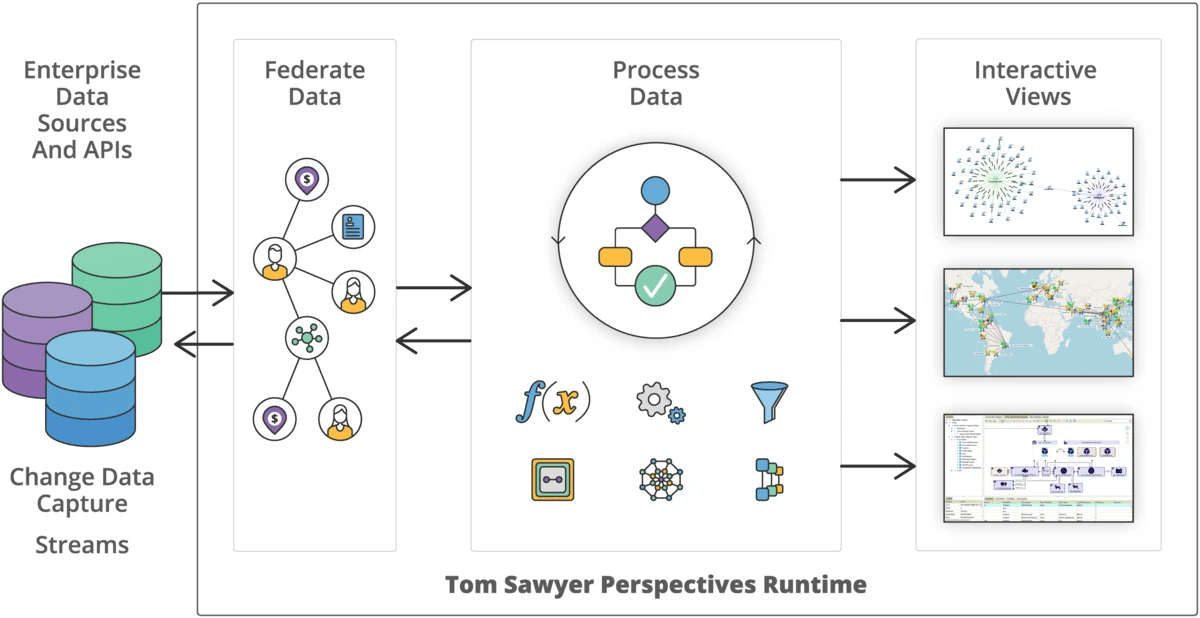

Our mature data integration platform, Perspectives, includes data federation and data integration capabilities that combine data residing in different data sources and with different data structures to provide enterprises with real-time access to a unified view of the data.

And we don't stop there. Perspectives can reveal valuable insights through powerful visualizations and analysis.

What do we mean by data federation and data integration?

Data Federation

Data federation allows for accessing and querying data from different sources as if they were stored in a single database, without physically moving or copying the data.
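To make the idea concrete, here is a minimal sketch that uses SQLite's `ATTACH DATABASE` statement as a stand-in for federation: two separate databases are queried with a single `JOIN`, and no rows are copied between them. This is not how Perspectives federates data; the `customers` and `billing.invoices` tables and the function name `federated_query` are hypothetical.

```python
import sqlite3


def federated_query() -> list:
    """Join rows from two separate SQLite databases in one query, without copying data."""
    # Primary database: stands in for a hypothetical CRM system.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Acme"), (2, "Globex")])

    # A second, independent database attached under the schema name "billing";
    # it stands in for a hypothetical billing system.
    conn.execute("ATTACH DATABASE ':memory:' AS billing")
    conn.execute("CREATE TABLE billing.invoices (customer_id INTEGER, status TEXT)")
    conn.executemany("INSERT INTO billing.invoices VALUES (?, ?)", [(1, "paid"), (2, "overdue")])

    # One query spans both databases; each keeps its own data in place.
    rows = conn.execute(
        "SELECT c.name, i.status "
        "FROM customers AS c JOIN billing.invoices AS i ON i.customer_id = c.id "
        "ORDER BY c.id"
    ).fetchall()
    conn.close()
    return rows


print(federated_query())  # [('Acme', 'paid'), ('Globex', 'overdue')]
```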

Data Integration

Data integration is the process of ingesting data from one or more external data sources into a single, unified in-memory graph model.
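A minimal sketch of the idea, assuming a toy graph model: rows from a relational query and records from a JSON document are ingested into one in-memory structure. The `Graph` class, the `integrate` function, and the node and edge labels are hypothetical and are not the Perspectives model API.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Graph:
    """Hypothetical minimal in-memory graph: nodes keyed by id, edges as (src, label, dst)."""
    nodes: dict = field(default_factory=dict)
    edges: list = field(default_factory=list)

    def add_node(self, node_id: str, **attrs) -> None:
        # Merge attributes when the same node arrives from more than one source.
        self.nodes.setdefault(node_id, {}).update(attrs)

    def add_edge(self, src: str, label: str, dst: str) -> None:
        self.edges.append((src, label, dst))


def integrate(sql_rows, json_text: str) -> Graph:
    g = Graph()
    # Source 1: (employee_id, name, department) rows, as a relational query might return them.
    for emp_id, name, dept in sql_rows:
        g.add_node(emp_id, type="Employee", name=name)
        g.add_node(dept, type="Department")
        g.add_edge(emp_id, "WORKS_IN", dept)
    # Source 2: a JSON array of project assignments that refers to the same employee ids.
    for item in json.loads(json_text):
        g.add_node(item["project"], type="Project")
        g.add_edge(item["employee"], "ASSIGNED_TO", item["project"])
    return g


g = integrate([("e1", "Ada", "R&D")], '[{"employee": "e1", "project": "p1"}]')
print(len(g.nodes), len(g.edges))  # 3 2
```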

Benefits of federating and integrating data

Informed decision-making

Having a consolidated view of data from different systems provides a single source of truth for accurate decision-making.

New insights exposed

Using integrated data, you can apply specialized visualization and analysis techniques to expose interesting and previously unseen results.

Increased productivity

Data consumers have a single source of truth to consult, enabling them to make mission-critical decisions quickly and accurately.

Improved data agility

By eliminating the need for data migration or synchronization, and the latency that comes with it, you can access and query data in real time for greater data agility and flexibility.

Cost- and time-savings

Reduce the dependency on IT teams for developing custom data integrations or moving data around.

Eliminate data redundancy

There is no need to move or copy data to a central data lake or data warehouse, a practice that can lead to consistency and accuracy issues.

Perspectives supports:

- 10 relational databases

- 9 graph databases

- 7 data formats

Supported data integrators

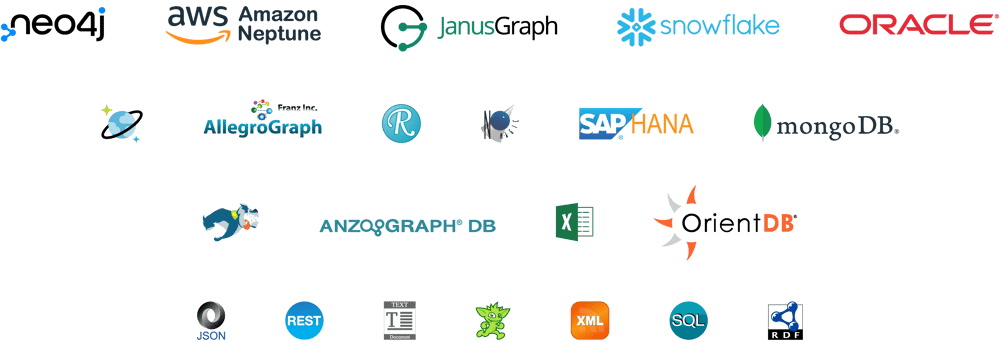

The Perspectives platform is data-agnostic. It includes a comprehensive set of data integrators that can populate a model from a data source. Some integrators act as a bidirectional bridge between a data source and a model and also support writing model data back to the data source.

Perspectives integrators can access data in one or more of these sources:

- Graph databases such as Neo4j and Amazon Neptune openCypher

- Graph databases supported by the Apache TinkerPop framework such as Amazon Neptune Gremlin, Microsoft Azure Cosmos DB, JanusGraph, OrientDB, and TinkerGraph

- Microsoft Excel

- MongoDB databases

- JSON files

- RDF sources such as Oracle, Stardog, AllegroGraph, and MarkLogic databases and RDF-formatted files

- RESTful API servers

- JDBC-compliant SQL databases

- Structured text documents

- XML

Data federation and data integration are easy with Perspectives

Whether you have a traditional relational database, a schema-less graph database, or a RESTful API, the data federation and integration process is easy with Perspectives.

For relational databases

The data integration process is easy for relational databases. Follow these three simple steps, then repeat as needed.

Connect to your data source

Use the provided integrators to connect to your data source.

Extract the schema

Use automatic schema extraction to read the structure of your data and pull out the metadata information automatically. Use the schema editor to view and manually adjust the Perspectives schema to match your application needs.
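As a rough illustration of what automatic schema extraction involves, the sketch below reads table, column, and foreign-key metadata from a SQLite database using the standard `sqlite_master` table and the `PRAGMA table_info` and `PRAGMA foreign_key_list` statements. The `extract_schema` function and the example tables are hypothetical. A tool might map tables to node types and foreign keys to edge types, but that mapping is an assumption here, not a description of Perspectives.

```python
import sqlite3


def extract_schema(conn: sqlite3.Connection) -> dict:
    """Return {table: {"columns": {name: declared_type}, "foreign_keys": [(column, ref_table, ref_column)]}}."""
    schema = {}
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name")]
    for table in tables:
        quoted = '"' + table.replace('"', '""') + '"'  # quote the identifier safely
        # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
        columns = {row[1]: row[2] for row in conn.execute(f"PRAGMA table_info({quoted})")}
        # PRAGMA foreign_key_list rows: (id, seq, table, from, to, on_update, on_delete, match)
        fks = [(row[3], row[2], row[4]) for row in conn.execute(f"PRAGMA foreign_key_list({quoted})")]
        schema[table] = {"columns": columns, "foreign_keys": fks}
    return schema


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT,
                            dept_id INTEGER REFERENCES departments(id));
""")
print(extract_schema(conn)["employees"]["foreign_keys"])  # [('dept_id', 'departments', 'id')]
```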

Bind the data

Bind the data source and the schema. This process determines how many model elements of each model element type are created in the model, and determines the values of all attributes in the model.
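The sketch below illustrates binding under a simplifying assumption: each element binding is a SQL query that yields one model element per result row, and each attribute binding names a column of that result set (the same query-and-column pattern that the graph integrators in this article use with Cypher and Gremlin). `ElementBinding` and `bind` are hypothetical names, not the Perspectives API.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class ElementBinding:
    """Hypothetical binding: one model element of `element_type` per row returned by `query`."""
    element_type: str
    query: str
    attributes: dict  # model attribute name -> result-set column name


def bind(conn: sqlite3.Connection, bindings: list) -> list:
    model = []
    for binding in bindings:
        cursor = conn.execute(binding.query)
        columns = [desc[0] for desc in cursor.description]  # result-set column names
        for row in cursor:
            record = dict(zip(columns, row))
            element = {"type": binding.element_type}
            # Each attribute binding picks its value from one column of the row.
            element.update({attr: record[col] for attr, col in binding.attributes.items()})
            model.append(element)
    return model


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT);
    INSERT INTO employees VALUES (1, 'Ada'), (2, 'Grace');
""")
employees = ElementBinding("Employee", "SELECT id, name FROM employees ORDER BY id",
                           {"key": "id", "label": "name"})
print(bind(conn, [employees]))
# [{'type': 'Employee', 'key': 1, 'label': 'Ada'}, {'type': 'Employee', 'key': 2, 'label': 'Grace'}]
```

Here the number of rows returned by the query determines how many `Employee` elements exist, and the column mapping determines each element's attribute values.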

Repeat

Repeat the steps to connect to and federate data from as many data sources as you need.

For schema-less graph databases

For graph databases, Perspectives supports automatic binding by default. This means you can use a query to preview the database content and then visualize the data in a drawing view without manually creating a schema and defining data bindings.

The new dynamic data integration tool in Tom Sawyer Perspectives 10.0 allows you to integrate your data in about 20 seconds. From there, you can springboard from a Cypher- or Gremlin-compatible database to a fully customized, interactive visualization application in only 100 seconds more!

Neo4j integrator

- Element bindings correspond to a read Cypher query.

- Attribute bindings correspond to a column in the result set of the Cypher query.

Neptune Gremlin integrator

The Neptune Gremlin integrator populates a model from an Amazon Neptune database that supports a Property Graph model. The integrator automatically creates a Tom Sawyer Perspectives schema in which the data bindings work as follows:

- Element bindings correspond to a Gremlin query.

- Attribute bindings correspond to a column in the result set of the Gremlin query.

Neptune openCypher integrator

- Element bindings correspond to a read Cypher query.

- Attribute bindings correspond to a column in the result set of the Cypher query.

TinkerPop integrator

- Element bindings correspond to a Gremlin query.

- Attribute bindings correspond to a column in the result set of the Gremlin query.

For RESTful APIs

Element Bindings

Element bindings correspond to:

- A URL, either full or relative to the base URL. The value of a URL is defined by an expression.

- A request method, one of GET, POST, HEAD, OPTIONS, PUT, DELETE, or TRACE.

- An optional request body. The value of a request body is defined by an expression.

- An absolute XPath location path. An XPath location path uses XML tags to identify locations within the XML response.

Attribute Bindings

Attribute bindings correspond to a relative path starting from the location path for element bindings.
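A minimal sketch of how such bindings could be evaluated, assuming the HTTP response has already been fetched: the element binding's location path selects the XML elements that become model elements, and each attribute binding is a path relative to a selected element. It uses Python's `xml.etree.ElementTree`, which supports only a subset of XPath, so the absolute path `/catalog/product` is written as `./product` relative to the root `<catalog>` element. `RestElementBinding`, `apply_binding`, and the example URL and XML are hypothetical.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RestElementBinding:
    """Hypothetical binding; url, method, and body would drive the HTTP request (not sent here)."""
    url: str                           # full URL or one relative to a base URL
    method: str                        # e.g. GET or POST
    location_path: str                 # path to the XML elements that become model elements
    attributes: dict = field(default_factory=dict)  # model attribute -> path relative to each element
    body: Optional[str] = None


def apply_binding(binding: RestElementBinding, response_xml: str) -> list:
    root = ET.fromstring(response_xml)
    elements = []
    for node in root.findall(binding.location_path):
        # Attribute bindings are evaluated relative to each matched element.
        elements.append({name: node.findtext(path) for name, path in binding.attributes.items()})
    return elements


response = """<catalog>
  <product><name>Widget</name><price>9.99</price></product>
  <product><name>Gadget</name><price>4.50</price></product>
</catalog>"""
binding = RestElementBinding("products", "GET", "./product", {"name": "name", "price": "price"})
print(apply_binding(binding, response))
# [{'name': 'Widget', 'price': '9.99'}, {'name': 'Gadget', 'price': '4.50'}]
```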

Writing back changes to the data source

Perspectives supports the full data journey, from federation and integration of your data, graph visualization, and graph editing, to writing back changes to the data source.

Support for most data sources

Perspectives can write data (commit changes) back to a Neo4j, Amazon Neptune, Apache TinkerPop, JanusGraph, or OrientDB database, or an RDF, Excel, SQL, text, or XML data source.

Worry-free integration

You don't need to worry about the various nuances and integration problems of each platform. Perspectives automatically handles that for you.

Maintains data integrity

During commit, Perspectives ensures data integrity.

Conflict detection and resolution

Perspectives detects and resolves conflicts during update and commit operations. A conflict occurs when the same object with the same identifier has mismatched values in the data source and in the model.
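Following that definition literally, a conflict check could compare objects that share an identifier and report every attribute whose values differ. The sketch below is illustrative only: `find_conflicts` and `resolve_in_favor_of_source` are hypothetical, and the "source wins" policy is an assumption, not the Perspectives resolution strategy. Production systems often also keep a snapshot taken at load time so they can tell a user's edit apart from a change made in the data source.

```python
def find_conflicts(source: dict, model: dict) -> dict:
    """Return {id: {attr: (source_value, model_value)}} for shared ids whose values differ."""
    conflicts = {}
    for obj_id in sorted(source.keys() & model.keys()):
        src, mod = source[obj_id], model[obj_id]
        diffs = {attr: (src.get(attr), mod.get(attr))
                 for attr in sorted(src.keys() | mod.keys())
                 if src.get(attr) != mod.get(attr)}
        if diffs:
            conflicts[obj_id] = diffs
    return conflicts


def resolve_in_favor_of_source(source: dict, model: dict, conflicts: dict) -> None:
    """Hypothetical policy: make each conflicting model attribute match the data source."""
    for obj_id, diffs in conflicts.items():
        for attr in diffs:
            if attr in source[obj_id]:
                model[obj_id][attr] = source[obj_id][attr]
            else:
                model[obj_id].pop(attr, None)  # attribute does not exist in the source


source = {"n1": {"name": "Ada", "age": 36}, "n2": {"name": "Bob"}}
model = {"n1": {"name": "Ada", "age": 37}, "n3": {"name": "Cy"}}
print(find_conflicts(source, model))  # {'n1': {'age': (36, 37)}}
```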

Commit configuration

With Perspectives, commit configuration is convenient and easy, putting you in control of which changes to commit. By default, automatic bindings for the Neo4j, Neptune Gremlin, Neptune openCypher, OrientDB, and TinkerPop integrators handle commit operations for you and save all changes automatically. You can, however, exclude any attributes from being committed, and you can use regular expressions to specify which attributes to exclude.
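A small sketch of regular-expression-based exclusion, assuming changes are represented as a dictionary of attribute names to new values and that a pattern must match the whole attribute name. `committable_changes` and the attribute names are hypothetical; this is not the Perspectives configuration mechanism.

```python
import re


def committable_changes(changes: dict, exclude_patterns: list) -> dict:
    """Drop every changed attribute whose full name matches one of the exclusion patterns."""
    excluded = [re.compile(pattern) for pattern in exclude_patterns]
    return {attr: value for attr, value in changes.items()
            if not any(rx.fullmatch(attr) for rx in excluded)}


changes = {"name": "Ada", "layout_x": 10.0, "layout_y": 42.5, "lastViewed": "2024-01-05"}
print(committable_changes(changes, [r"layout_.*", "lastViewed"]))  # {'name': 'Ada'}
```

Using `fullmatch` rather than `search` means a pattern such as `layout_.*` excludes `layout_x` but not an attribute like `x_layout_hint`.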

Learn more about the graph editing capabilities in Perspectives.

The benefits of data commit are clear

Perspectives provides all the advantages of model persistence while eliminating the pains associated with it.

Real-Time Updates

By writing changes directly to the original data source, you can provide real-time updates to the data without delays. This is particularly important in applications where up-to-date information is critical, such as financial systems, real-time monitoring, or collaborative editing tools.

Data Consistency

Writing data back helps maintain data consistency. When you update the data source immediately after modifying the model, all users and components working with that data see the same changes. This prevents data discrepancies and conflicts.

Historical Tracking

Committing changes in the original data source can provide a complete historical record of all modifications made to the data. This audit trail can be invaluable for debugging, compliance, and accountability purposes.

Scalability

In distributed systems, writing changes back to the original source can distribute the data updates across multiple nodes, improving the scalability and load balancing of your application.

Simplified Recovery

If a commit process fails midway, you can use the recorded changes to recover and reapply updates, ensuring data integrity.
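One common way to make this work, sketched here as an assumption rather than a description of Perspectives internals, is to number each recorded change and have the target remember the last change it applied. After a failure, rerunning the commit skips what already landed and reapplies the rest. `Change`, `commit`, and the `_last_seq` marker are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Change:
    seq: int       # position in the recorded change log
    obj_id: str
    attr: str
    value: object


def commit(store: dict, log: list, fail_at=None) -> None:
    """Apply logged changes in order, skipping any already applied; optionally simulate a failure."""
    last_applied = store.get("_last_seq", 0)
    for change in sorted(log, key=lambda c: c.seq):
        if change.seq <= last_applied:
            continue  # applied during an earlier, interrupted commit
        if change.seq == fail_at:
            raise RuntimeError(f"simulated failure at change {change.seq}")
        # In a real data source, the change and the progress marker would be
        # written in the same transaction so they cannot diverge.
        store.setdefault(change.obj_id, {})[change.attr] = change.value
        store["_last_seq"] = change.seq


store = {}
log = [Change(1, "n1", "name", "Ada"), Change(2, "n1", "age", 37), Change(3, "n2", "name", "Bob")]
try:
    commit(store, log, fail_at=2)  # fails midway; only change 1 is applied
except RuntimeError:
    pass
commit(store, log)  # recovery: reapplies changes 2 and 3 only
print(store)  # {'n1': {'name': 'Ada', 'age': 37}, '_last_seq': 3, 'n2': {'name': 'Bob'}}
```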

Ease of Collaboration

When multiple users or systems interact with the same data source, writing changes back simplifies collaboration. Everyone sees the same data state, reducing confusion and conflicts.

Reduced Latency

In cases where data retrieval is time-consuming (e.g., fetching data from external APIs or databases), persisting changes locally can reduce latency by avoiding repetitive data fetches.

Easier Rollbacks

If a change leads to unexpected results or errors, reverting to the previous data state is more straightforward when changes are already stored in the original source.

Adherence to Data Policies

In scenarios with strict data governance or compliance requirements, writing changes back to the original data source ensures that data policies and access controls are consistently enforced.

Applying visualization and analysis to your data

Once your integrators are configured in Perspectives, the data is loaded into our in-memory native graph model, which makes working with the data effective and efficient.

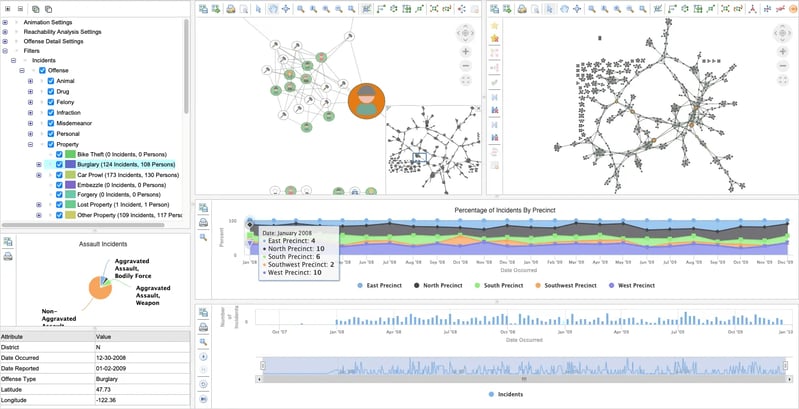

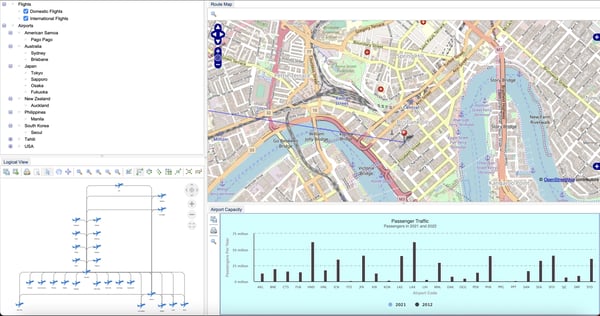

Perspectives makes it easy to design and configure an end-user web or desktop application that uses the graph model so your users can access the data in real time. You configure easy-to-understand views of the data, including graph drawings, tables, charts, timelines, maps, and more. You can also incorporate powerful analytics into the resulting application so users gain even more insight into the data.

Watch this video to see how easy it is to configure a dashboard-style layout of views for your Perspectives application:

An example application created with Perspectives showing a dashboard layout with drawing, map, tree, and chart views.

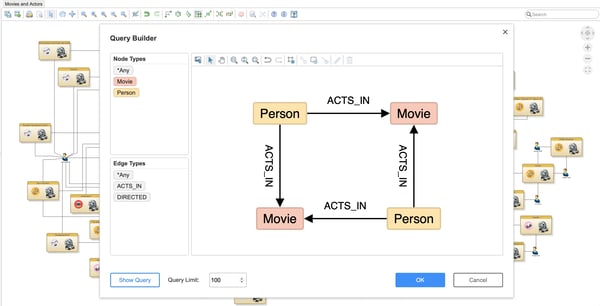

Query graph databases without the need to know Gremlin or Cypher.

Eliminate the need to know complex query languages

You may not know every query your users will want to perform. That's why we created the query builder, which allows non-technical users to interact with graph database data without needing to know Gremlin or Cypher. The advanced, visual query builder lets users search for matching patterns in their graphs.
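To give a sense of what the query builder saves users from writing, the sketch below matches a single one-hop pattern, equivalent in spirit to the Cypher query `MATCH (a:Person)-[:KNOWS]->(b:Person) RETURN a, b`, over a toy in-memory graph. `match_pattern` and the data are hypothetical and do not reflect how the query builder is implemented.

```python
def match_pattern(nodes: dict, edges: list, src_type: str, label: str, dst_type: str) -> list:
    """Return (src, dst) pairs that match the pattern (:src_type)-[:label]->(:dst_type)."""
    return [(src, dst) for src, rel, dst in edges
            if rel == label
            and nodes[src]["type"] == src_type
            and nodes[dst]["type"] == dst_type]


nodes = {"ada": {"type": "Person"}, "bob": {"type": "Person"}, "acme": {"type": "Company"}}
edges = [("ada", "KNOWS", "bob"), ("ada", "WORKS_AT", "acme"), ("bob", "KNOWS", "acme")]
print(match_pattern(nodes, edges, "Person", "KNOWS", "Person"))  # [('ada', 'bob')]
```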

Read our blog to learn more about the query builder.

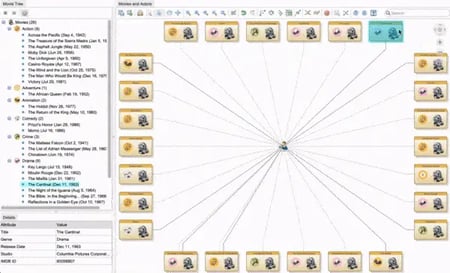

Improve data navigation and analysis with load neighbors

Load neighbors is an innovative tool that can greatly improve the data navigation and analysis experience for end users. With load neighbors, users can explore their data more effectively, saving them valuable time and allowing them to focus on their most important tasks. Load neighbors allows users to load data incrementally based on their use case. As a result, users can gain insights and make faster decisions, which is essential in today’s fast-paced business world.
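The incremental idea behind load neighbors can be sketched as a one-hop expansion: the view starts with a few nodes, and each request pulls in only the selected node's direct neighbors from the backing data source. This is a minimal sketch under that assumption; `load_neighbors` is hypothetical, and a list of edges stands in for the database query a real application would issue.

```python
def load_neighbors(source_edges: list, view_nodes: set, view_edges: set, selected: str) -> set:
    """Add `selected`'s one-hop neighbors (either direction) to the view; return the newly added nodes."""
    added = set()
    for src, dst in source_edges:
        if selected not in (src, dst):
            continue
        neighbor = dst if src == selected else src
        view_edges.add((src, dst))
        if neighbor not in view_nodes:
            view_nodes.add(neighbor)
            added.add(neighbor)
    return added


source_edges = [("a", "b"), ("b", "c"), ("c", "d"), ("e", "a")]
view_nodes, view_edges = {"a"}, set()
print(sorted(load_neighbors(source_edges, view_nodes, view_edges, "a")))  # ['b', 'e']
print(sorted(load_neighbors(source_edges, view_nodes, view_edges, "b")))  # ['c']
```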

Learn more about the load neighbors feature.

An example of load neighbors being used in Perspectives.


Copyright © 2024 Tom Sawyer Software. All rights reserved. | Terms of Use | Privacy Policy
