Tom Sawyer Data Streams for Real-Time Knowledge Graph Modeling

ETL structured and unstructured data into a governed, real-time knowledge graph

Tom Sawyer Data Streams is a schema-driven platform to ETL your structured and unstructured data into a single, governed, query-ready knowledge graph. Subscribe to Apache Kafka (or Confluent) topics sourced from databases, files, and APIs; apply precise transformations and filters; then run flows continuously to normalize, enrich, and link changing streams in real time. Persist the knowledge graph in a graph database for scalable sharing and downstream analysis. Integrate with existing pipelines and catalogs, and use the graph as a high-quality context layer for AI, including RAG and reasoning.

Tom Sawyer Data Streams reduces integration efforts across legacy and streaming systems, fits existing pipelines and tools, and delivers a complete, accurate picture for lineage, impact analysis, and operational decisions.

Watch this short introduction to Data Streams for unifying data into a governed, real-time knowledge graph.

Data Streams 1.0 available now

Now generally available, Tom Sawyer Data Streams 1.0 continuously ETLs structured and unstructured data from Kafka, databases, files, and APIs into a governed, query-ready knowledge graph, reducing integration effort and enabling scalable analytics and AI.

Designed for enterprise data architects

Enterprises face fragmented sources, legacy systems, and real-time demands. Tom Sawyer Data Streams supports a range of high-impact scenarios for data architects and AI platform engineers, from migration and continuous sync to validating generative AI results and integrating agentic results into a single-source-of-truth database.

Discover how Data Streams addresses common challenges with repeatable patterns and proven tooling.

One-Time Data ETL

ETL legacy relational models to a governed, query-ready knowledge graph. Introspect the source schema, design visual flows that rename, merge, or split tables and convert properties into edges, then execute once to materialize the analytics-ready knowledge graph without expensive data migrations.

Continuous Sync / Change Data Capture (CDC) to Graph

Keep operational systems and streams in lockstep with an always-current knowledge graph. Ingest Kafka topics and database changes, apply SpEL transformations and filters, and continuously normalize, enrich, and link records as they arrive.
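
As a rough illustration of the continuous-sync pattern, the sketch below applies Debezium-style change events to an in-memory store of nodes. It is not Data Streams code: the `apply_change` helper, the `id` key, and the sample records are assumptions made for this example, and in the product the equivalent logic is configured as a flow rather than written in Python. The `op`, `before`, and `after` fields follow the Debezium change event envelope.

```python
from typing import Any


def apply_change(nodes: dict[str, dict[str, Any]], event: dict[str, Any]) -> None:
    """Apply one Debezium-style change event to an in-memory node store."""
    op = event["op"]  # "c" = create, "u" = update, "d" = delete, "r" = snapshot read
    if op in ("c", "u", "r"):
        row = event["after"]
        nodes[str(row["id"])] = row        # upsert the node, keyed by its id (assumed key)
    elif op == "d":
        row = event["before"]
        nodes.pop(str(row["id"]), None)    # drop the node if it is present


# Hypothetical events, in arrival order.
nodes: dict[str, dict[str, Any]] = {}
events = [
    {"op": "c", "before": None, "after": {"id": 1, "name": "Acme"}},
    {"op": "u", "before": {"id": 1, "name": "Acme"}, "after": {"id": 1, "name": "Acme Corp"}},
    {"op": "c", "before": None, "after": {"id": 2, "name": "Globex"}},
    {"op": "d", "before": {"id": 2, "name": "Globex"}, "after": None},
]
for event in events:
    apply_change(nodes, event)
```

After the four events, only the updated record for id 1 remains, which is the "always-current" behavior the pattern aims for.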

AI Workflows

Improve LLM accuracy by feeding normalized events into feature stores, embeddings, and graph-backed retrieval. Then validate outputs against authoritative nodes and lineage, and write agent results back to keep a single source of truth.

Entity Resolution and Master Data

Create golden entities across silos to power consistent analytics and operations. Normalize identifiers, apply matching rules in SpEL, and persist deduplicated nodes and relationships for trusted 360° views.
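
To make the idea of a golden entity concrete, here is a minimal sketch that normalizes one identifier and merges records that share it. In Data Streams the matching rules are expressed in SpEL; this Python version only illustrates the concept. The `normalize_email` and `resolve` helpers, the field names, and the "first-seen name wins" rule are all assumptions for the example.

```python
from collections import defaultdict


def normalize_email(email: str) -> str:
    """Lowercase and trim an email so formatting differences do not block a match."""
    return email.strip().lower()


def resolve(records: list[dict[str, str]]) -> dict[str, dict[str, object]]:
    """Group records that share a normalized email into one golden entity."""
    groups: dict[str, list[dict[str, str]]] = defaultdict(list)
    for record in records:
        groups[normalize_email(record["email"])].append(record)

    golden: dict[str, dict[str, object]] = {}
    for key, members in groups.items():
        golden[key] = {
            "name": members[0]["name"],                        # first-seen name wins (arbitrary rule)
            "sources": sorted(m["source"] for m in members),   # provenance of the merged records
        }
    return golden


# Hypothetical records from three silos.
records = [
    {"source": "crm", "name": "Ada Lovelace", "email": " Ada@Example.com "},
    {"source": "billing", "name": "A. Lovelace", "email": "ada@example.com"},
    {"source": "support", "name": "Grace Hopper", "email": "grace@example.com"},
]
golden = resolve(records)
```

Real matching usually combines several identifiers and fuzzy rules; a single exact key keeps the sketch short.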

Fraud and Risk Signal Fusion

Fuse transactions, accounts, devices, and geolocation to surface suspicious patterns in real time. Materialize relationship signals like shared devices or rings, enrich events in flow, and route high-confidence alerts downstream.
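
A shared-device signal can be sketched in a few lines. The example below is illustrative only and does not reflect how Data Streams implements signal fusion: the `shared_devices` helper and the login pairs are hypothetical. It groups accounts by device and keeps the devices used by more than one account.

```python
from collections import defaultdict


def shared_devices(logins: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Return devices used by more than one account, with the accounts involved."""
    accounts_by_device: dict[str, set[str]] = defaultdict(set)
    for account, device in logins:
        accounts_by_device[device].add(account)
    return {d: accts for d, accts in accounts_by_device.items() if len(accts) > 1}


# Hypothetical (account, device) login pairs.
logins = [
    ("acct-1", "dev-A"),
    ("acct-2", "dev-A"),
    ("acct-3", "dev-B"),
    ("acct-1", "dev-C"),
]
signals = shared_devices(logins)
```

In a graph, the same signal is a device node with more than one incoming account edge, which is why materializing the relationship makes the pattern easy to query.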

Data Catalog, Lineage, and Governance

Consolidate schemas, datasets, policies, and lineage edges to accelerate impact analysis and compliance. Track how data moves, who uses it, and which rules apply, with the graph persisted in a graph database for broad sharing and auditability.
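
Impact analysis over lineage edges amounts to a graph traversal. The sketch below assumes lineage is available as a simple adjacency mapping; the `downstream` helper and the dataset names are hypothetical, and a production system would run the equivalent query against the graph database instead.

```python
from collections import deque


def downstream(lineage: dict[str, list[str]], start: str) -> list[str]:
    """Breadth-first walk over lineage edges to list everything affected by `start`."""
    seen = {start}
    order: list[str] = []
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for target in lineage.get(current, []):
            if target not in seen:      # guard against cycles and repeated visits
                seen.add(target)
                order.append(target)
                queue.append(target)
    return order


# Hypothetical lineage: each key feeds the datasets in its list.
lineage = {
    "orders_table": ["orders_topic"],
    "orders_topic": ["revenue_report", "churn_model"],
    "churn_model": ["retention_dashboard"],
}
impacted = downstream(lineage, "orders_table")
```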

Getting started with Tom Sawyer Data Streams

Data Streams makes it simple to connect to your topics and build your knowledge graph model. Connect to your streams, then apply transformations using the visual data flow editor.

View the resulting schema in an easily understandable graph drawing or tree view.

1. Connect to Kafka Topics

Enter the connection details to connect to the Kafka topics of interest.

2. Apply Transformations

Transform topics to convert them from nodes to edges. Rename topics for consistency and clarity.

3. Visualize and Validate the Schema

View the resulting schema as you work. See a graph or tree view of the schema.

4. Save and Deploy

Save your knowledge graph model to a graph database Sink and deploy the streams for continuous execution.

ETL data in real time from structured and unstructured data sources

Tom Sawyer Data Streams ETLs both structured and unstructured data by subscribing to Apache Kafka (or Confluent) topics you provision. It then transforms and persists those events into your knowledge graph model. Use your tool of choice, such as Kafka Connect, Change Data Capture tools, or custom producers, to publish from your system of record and subscribe to the topic in Data Streams. You control which topics represent nodes, edges, or attributes, and you can secure access with existing Kafka authentication and encryption settings.

With Kafka, you can create topics from almost any system and feed them into Data Streams, including:

- Relational databases - PostgreSQL (including through a Debezium connector), MySQL, SQL Server, Oracle.

- NoSQL/search - MongoDB, Cassandra, Elasticsearch.

- Graph/datastores - Neo4j, JanusGraph, RDF/SPARQL endpoints.

- Warehouses - Snowflake, BigQuery, Redshift.

- Files/object storage - CSV/JSON from S3, Azure Blob, GCS.

- Applications/APIs - Salesforce, ServiceNow, Jira, SAP, Dynamics.

- Log/IoT/event sources - syslog, MQTT, sensors, webhooks.


Create Kafka topics from your data sources for easy integration and transformation in Data Streams.

Visually design and validate your data flows

The intuitive web-based designer in Tom Sawyer Data Streams lets you assemble sources, transformations, conditions, and sinks to build and monitor data flows, accelerating delivery while reducing custom code.

Automatic graph layout organizes complex pipelines for instant clarity, so you can iterate faster, validate logic visually, and promote changes with confidence, shortening the path from prototype to production.

The Tom Sawyer Data Streams visual data flow editor makes it easy to build and validate your data flow.

Effortlessly apply transformations to topics

Transformations turn raw stream events into a coherent, query-ready model. Incoming topics enter the flow as nodes; you then promote the right connections by converting selected nodes into edges and standardize naming to match your domain vocabulary. The result is a knowledge graph model that reflects how things actually relate, not just how they were recorded upstream.
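
The node-to-edge conversion described above can be pictured with a small sketch. It assumes a record that arrives as a node but really describes a relationship between two other entities. The `node_to_edge` helper, the key names, and the `WORKS_AT` label are hypothetical and are not part of the Data Streams API; in the product this step is defined visually in the flow editor.

```python
def node_to_edge(record: dict, source_key: str, target_key: str, label: str) -> dict:
    """Turn a node-like record holding two foreign keys into an edge between them."""
    properties = {k: v for k, v in record.items() if k not in (source_key, target_key)}
    return {
        "label": label,
        "source": record[source_key],
        "target": record[target_key],
        "properties": properties,   # remaining fields ride along as edge properties
    }


# Hypothetical event from an "employment" topic.
employment = {"person_id": "p-7", "company_id": "c-3", "since": 2019}
edge = node_to_edge(employment, "person_id", "company_id", "WORKS_AT")
```

The record's two identifiers become the edge endpoints, and everything else is kept as edge properties so no information is lost in the conversion.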

For technical teams, this approach reduces custom ETL operations and enforces consistency without heavy refactoring. You define intent visually, validate the evolving structure against your schema, and keep semantics stable across sources. Expressions (SpEL) give you precise, lightweight control while keeping the overall flow simple to reason about and easy to review.

With Data Streams transformations, it's easy to build the knowledge graph model you wish you had.

Persist data in a graph database

Data Streams persists the model to a graph database for durability, sharing, and governance. The stored graph becomes a query-ready foundation for analytics, visualization, and AI, and is compatible with existing pipelines, catalogs, and security controls. Versionable schemas and clear provenance help teams evolve models safely while maintaining an authoritative, auditable source of truth.

Authenticate and control access

Data Streams supports authentication with OAuth 2.0 and Keycloak for secure, multi-user environments. Align with enterprise standards for access and encryption while maintaining a clean, auditable boundary around sensitive data.

Now that you have your knowledge graph model, what next?

Once your data flows are running, the resulting knowledge graph lives in your environment and fits into your existing infrastructure. Persisted in a graph database, the model plugs into your existing pipelines, catalogs, governance, AI workflows, and security policies. You can query the model directly, push extracts to warehouses, trigger downstream jobs, and integrate with AI, lineage, MDM, or observability tools.

The model and data are yours, so you can evolve schemas, add sources, and make version changes without vendor lock-in.

Apply graph power to your AI workflows

Tom Sawyer Data Streams supplies the structured, governed context that modern AI systems require. With your query-ready knowledge graph in place, AI teams gain a governed context layer for training, inference, and agent workflows.

The graph provides clean entities, explicit relationships, and provenance, which are ideal for AI feature engineering, retrieval/grounding, and constraint-aware prompts.

- Prepare and process data within AI pipelines: Normalize, enrich, and link events into structured entities and relationships suitable for feature stores, embeddings, and vector indexes.

- Support LLM (large language model) creation for improved accuracy: Provide graph-backed retrieval and grounding during training, fine-tuning, and RAG to reduce hallucinations and tighten answers.

- Validate generative AI results: Check outputs against authoritative nodes and edges, enforce policy constraints via graph rules, and trace impact through lineage for reproducible evaluation.

- Integrate agentic results into a single source of truth: Write agent plans, tool calls, and derived facts back into the governed graph to maintain a consolidated, provenance-rich record for ongoing learning and operations.
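
As one way to picture validating generative AI results, the sketch below checks model-generated claims against a set of authoritative edges. It assumes claims have already been extracted as (subject, predicate, object) triples; the `validate_claims` helper and the sample data are hypothetical, and a real deployment would query the graph database rather than an in-memory set.

```python
Triple = tuple[str, str, str]


def validate_claims(claims: list[Triple], graph_edges: set[Triple]) -> dict[str, list[Triple]]:
    """Split model-generated claims into those backed by the graph and those that are not."""
    result: dict[str, list[Triple]] = {"supported": [], "unsupported": []}
    for claim in claims:
        key = "supported" if claim in graph_edges else "unsupported"
        result[key].append(claim)
    return result


# Hypothetical authoritative edges and model output.
graph_edges = {
    ("Acme", "SUPPLIES", "Globex"),
    ("Globex", "LOCATED_IN", "Springfield"),
}
claims = [
    ("Acme", "SUPPLIES", "Globex"),
    ("Acme", "LOCATED_IN", "Springfield"),
]
report = validate_claims(claims, graph_edges)
```

Unsupported claims are not necessarily false; they are simply not backed by the graph and can be routed for review.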

Visualize your model with Tom Sawyer Software companion products

For added value, pair Data Streams with Tom Sawyer Software’s graph and data visualization and analysis products to explore, analyze, and communicate the value of the graph. Our best-in-class automatic graph layout quickly transforms complex connections into clean, easy-to-understand visualizations.

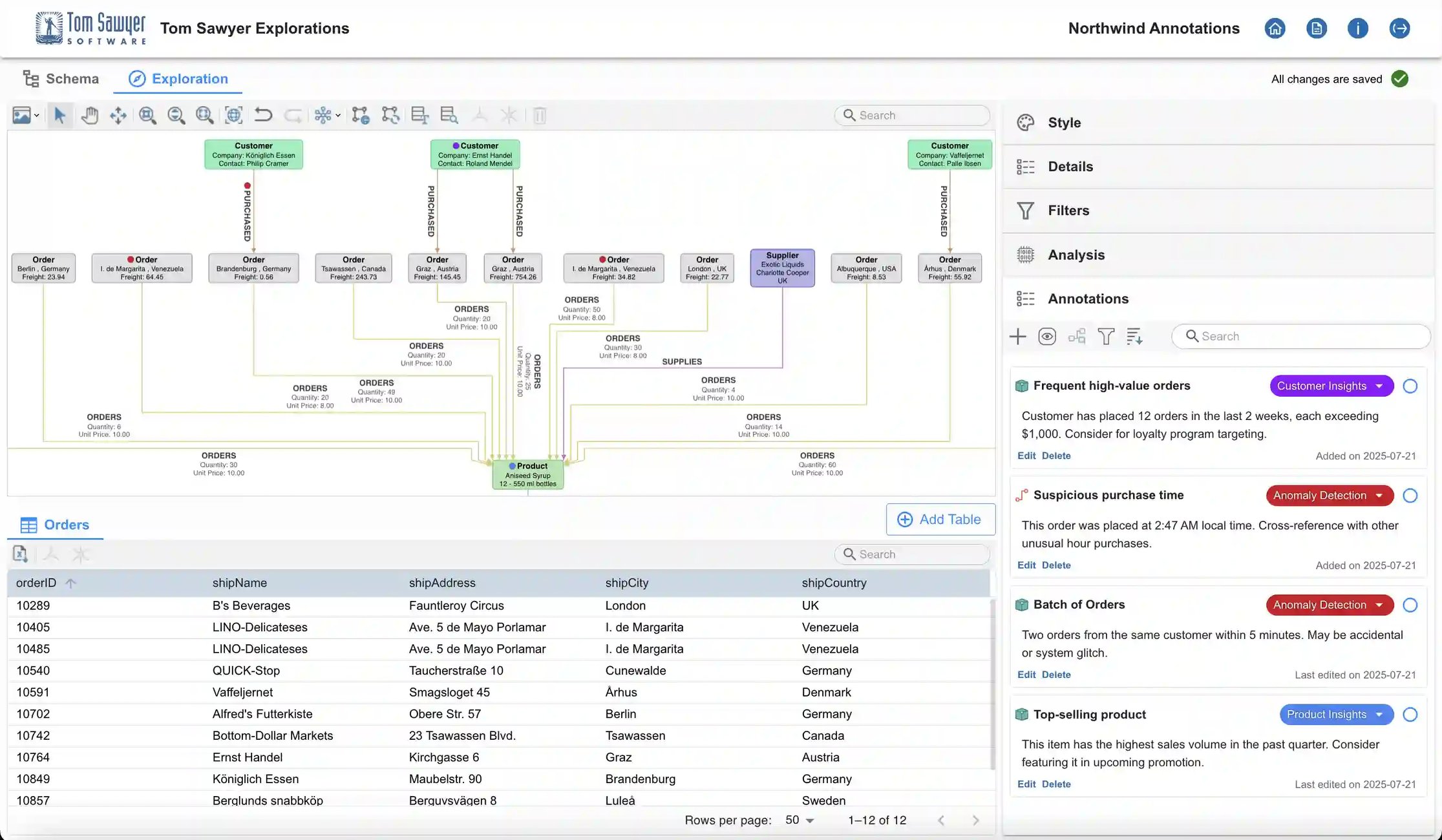

Tom Sawyer Explorations

Explorations is a no-code graph intelligence application for analysts of all levels to connect to graph databases, build queries visually without query language expertise, and instantly explore the results as an interactive knowledge graph. With built-in graph analysis algorithms, users can uncover hidden patterns and gain deeper insights effortlessly.

Tom Sawyer Perspectives

Perspectives is a powerful, low-code development platform for building standalone applications or embedding advanced data analysis and visualization into existing systems. Use advanced layouts, styling, and UX components to build custom apps and dashboards on top of your graph model.

The benefits of Tom Sawyer Data Streams are clear

Data Streams helps teams break through data silos so they can see the full picture. It provides a practical path to build a usable knowledge graph from disparate systems and streams, and to turn that graph into real-time insight for operations and analysis.

Unified View of Critical Data

Consolidate relational, NoSQL, files, APIs, and event streams via Kafka into one consistent, query-ready model.

Faster Time to Insight

Visual flow design and expressive transformations reduce custom ETL work and shorten integration cycles.

Real-Time Processing

Execute flows continuously to normalize, enrich, and link events as they arrive for timely decisions.

Higher Data Quality and Consistency

Schema-driven mapping, validation, and filtering produce cleaner entities and relationships.

Context-Rich Analytics

Model relationships for impact analysis, lineage tracing, and root-cause investigation.

Governance and Auditability

Persist the graph to a graph database for shared access, versionable schemas, and clear provenance.

Ownership and Portability

Your model and data live in your database, ready for use with existing pipelines, tools, and policies.

Secure Integration

Works with your Kafka authentication and encryption settings.

Incremental Adoption

Start with a single migration or CDC flow and expand as value is proven across teams.


Copyright © 2026 Tom Sawyer Software. All rights reserved. | Terms of Use | Privacy Policy
